Simplification of Retrieval Augmented Generation

RAG stands for Retrieval Augmented Generation, a buzzword that flew over my head for months until recently. I'm going to try to explain, in my own way, why it really is such a big deal.

First, RAG combines two powerful approaches in artificial intelligence: retrieval and generation. This combo makes AI models significantly more useful across applications. It improves their accuracy, their relevance, and, as we are all experiencing with GPT-4, their ability to reflect. Along with the promise of a really clean explanation, I’ll even walk through installing AnythingLLM so you can implement your own home-baked RAG solution.

It’s the closest you’ll ever get to saying, “Put the Zendesk down, hun; we’ve got RAG at home.”

The Mechanics of RAG in Artificial Intelligence

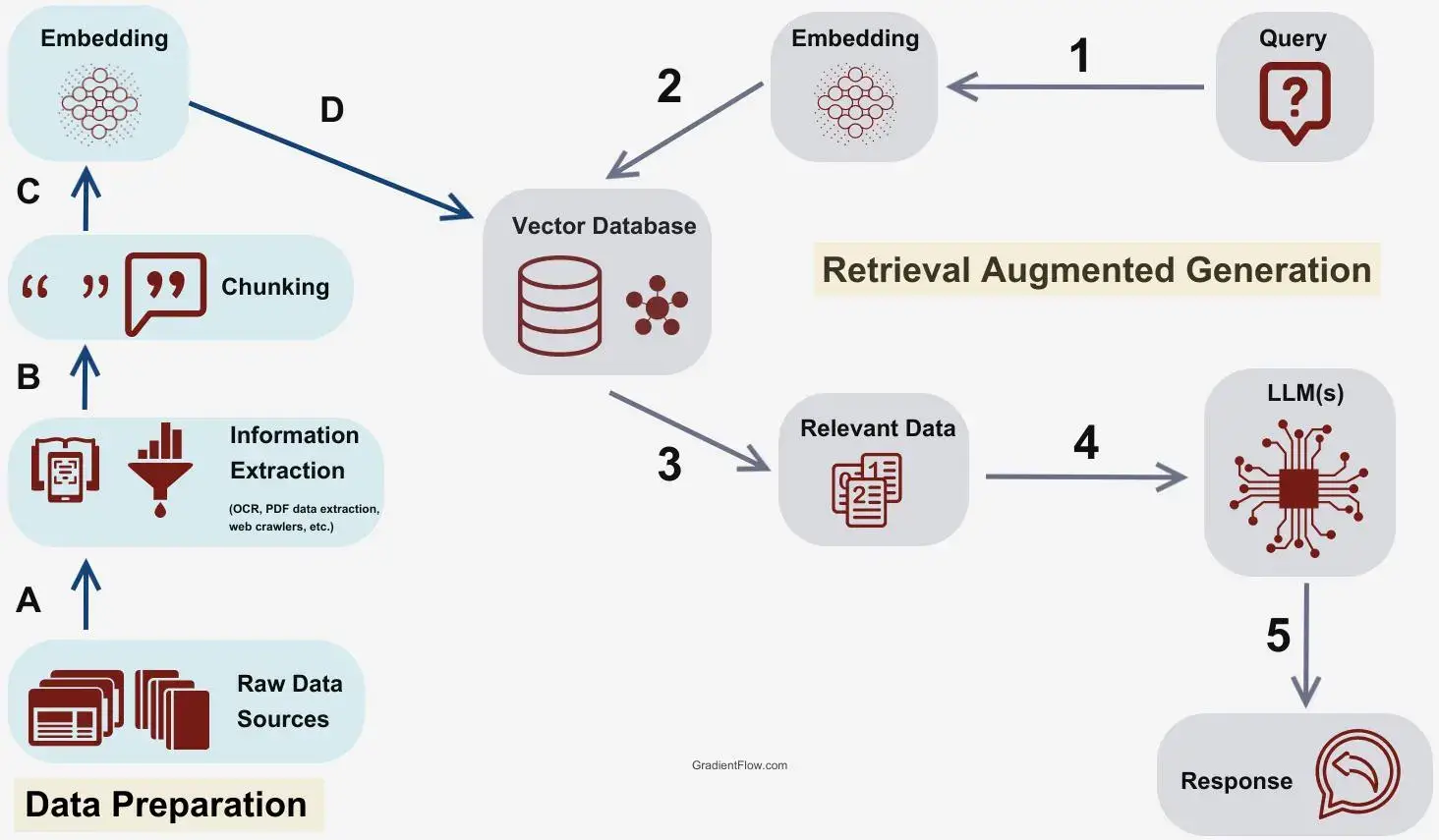

Now that we’ve set the stage, let’s get into the nitty-gritty of how RAG works. At its core, RAG has a dual-component system: the retriever and the generator. Think of them as Batman and Robin, each playing their own role as a team (Robin obviously carrying the weight).

The retriever is the brainiac that searches through a vast corpus of documents or data to find the most relevant pieces of information. It works with the kind of speed and accuracy you’d get if every single librarian that’s ever existed combined their forces to assist you, and only you.

Once the retriever hands over the expanded context from its knowledge store, the generator takes that retrieved information and weaves it into coherent, contextually appropriate responses. It’s like a master storyteller that could fix every M. Night Shyamalan movie plot ever.
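If you’d rather see the hand-off than read about it, here is a toy sketch in Python. It is not how AnythingLLM or any production system does it: real retrievers typically use embeddings and a vector database, while this one just counts shared words and compares them with cosine similarity. The names `retrieve`, `build_prompt`, and `KNOWLEDGE_BASE` are made up for illustration, and the generator is left out entirely; the sketch stops at the prompt you would hand to whatever LLM you use.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=2):
    """Retriever: return up to k documents that share words with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, context):
    """Assemble the augmented prompt that a generator (LLM) would receive."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base, standing in for your real documents.
KNOWLEDGE_BASE = [
    "Password resets are handled from the account settings page.",
    "Refunds are issued within five business days of approval.",
    "Our support desk is open Monday through Friday.",
]

question = "How do I reset my password?"
context = retrieve(question, KNOWLEDGE_BASE, k=1)
prompt = build_prompt(question, context)
print(prompt)
```

The retriever picks the password document because it is the only one sharing a word with the question, and the generator would then answer from that context instead of from whatever it memorized during training.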

To put this into perspective, let’s consider a couple of real-world examples. In customer support, a RAG-enabled chatbot can quickly pull up relevant answers from a knowledge base, ensuring that customers receive accurate help sooner. Or think about content generation, where RAG can assist writers by supplying not only up-to-date facts and figures but also samples of their own past writing to match their style, so they don’t sound like imposters.

| Industry | Application | Benefits |
|---|---|---|
| Customer Service | Personalized responses using customer history | Improved support quality |
| Legal Research | Searching case law and statutes | Enhanced research efficiency |
| Content Creation | Fetching facts for articles | Increased accuracy and engagement |
| Employee Onboarding | Providing real-time training information | Accelerated learning process |
| Customer Feedback Analysis | Integrating data for sentiment analysis | Improved customer satisfaction |

The architecture of RAG is revolutionary and is paving the way for enhanced interactions in various domains. It’s not just about generating responses; it’s about creating a smarter AI that understands its context and can provide information that’s current and relevant.

Why RAG is an Effective Application of Technology

Now you might be wondering, “Why should I care?”

Great question!

The advantages of using RAG over traditional LLM approaches are plentiful. For starters, it offers improved response accuracy. Traditional models are frozen at their training cutoff, leading to the dreaded “outdated knowledge” problem. OpenAI’s o1 is dope as hell, but so much has changed since its October 2023 knowledge cutoff. RAG fills that gap by pulling in current information at the moment you ask the question.

Moreover, the adaptability is impressive. Whether it’s healthcare, finance, or education, RAG can tailor its responses to fit various domains seamlessly. For instance, in healthcare, an AI powered by RAG can provide real-time updates about the latest research or treatments, which is invaluable for both professionals and patients alike.

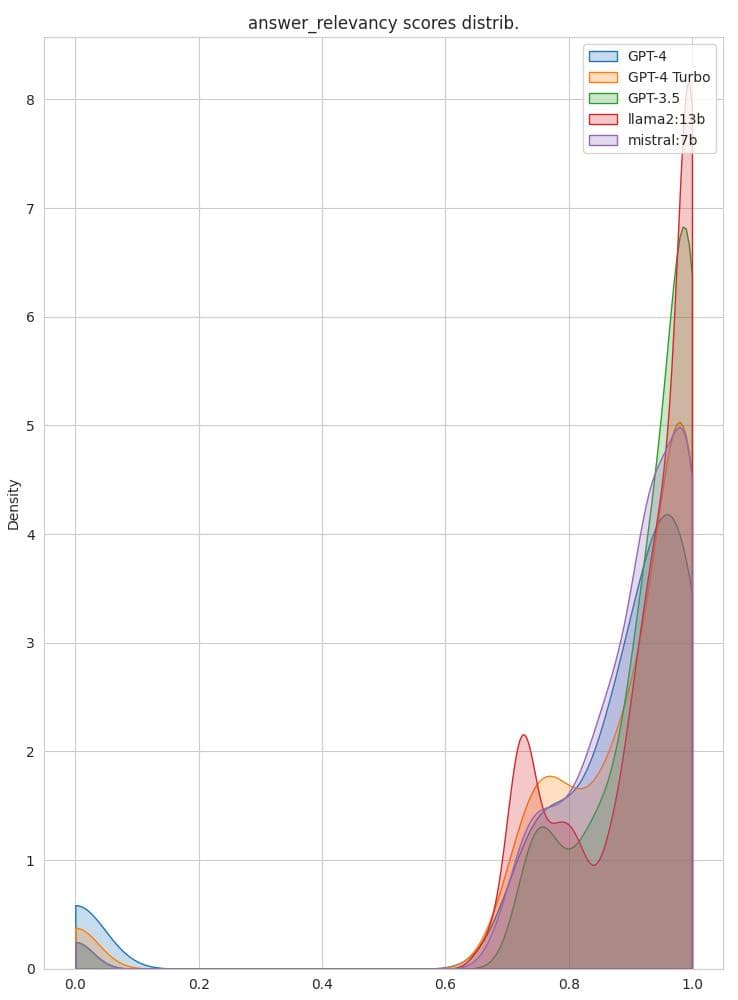

Research findings and case studies back up the effectiveness of RAG. In one study by Germany-based TheBlue, a RAG-based model outperformed its traditional counterparts in user satisfaction and interaction quality, suggesting that users appreciate relevant, timely responses. The research highlighted not only the improved accuracy but also how RAG positively impacts user engagement.

Key Components of AnythingLLM

Now, let’s talk about AnythingLLM and how it fits into all of this. AnythingLLM is a framework that’s designed to work harmoniously with Retrieval Augmented Generation. What is groundbreaking about this is how accessible the technology becomes.

At its core, AnythingLLM boasts a user-friendly interface with customizable templates which simplify the integration of RAG. This means you don’t have to be a coding wizard to get started. Even if you’re a newbie, you’ll find that AnythingLLM provides a straightforward pathway to harness the power of RAG in your workflow.

As if I weren’t already threatening you with a good time, the community and support resources surrounding AnythingLLM are top-notch. Even though setup and installation are fairly straightforward, you’ll still find a treasure trove of documentation where you can expand your use case and troubleshoot issues.

Your Own Local RAG Solution in AnythingLLM

Alright, so now that you’re all hyped up about RAG and AnythingLLM, how do you actually get started? Fear not; I’m here to guide you through the process with a step-by-step approach if you haven’t already ditched the article to bing it (yes – I 100% meant what I just said).

First things first, you need to check your system requirements. Make sure you have the latest version of AnythingLLM installed on your machine. Once you’re all set, it’s time for some preliminary configurations. All the major points and clicks are featured below.

Conclusion

Tying everything together, RAG has been quite a game changer in AI innovation, and it keeps transforming the way we interact with technology. By seamlessly blending retrieval and generation, RAG provides us with accurate, relevant information that enhances any experience. Whether you’re in customer support, content generation, or any other domain, the potential applications of RAG are boundless.

AnythingLLM serves as a powerful tool for implementing RAG, offering user-friendly features that make it accessible for everyone. So, whether you’re a seasoned developer or just starting out, you have the resources to take advantage of this cutting-edge technology.

FAQ

What is RAG, and why is it important in AI?

RAG, or Retrieval-Augmented Generation, is a powerful approach in AI that combines the strengths of retrieval and generation. It allows AI models to access real-time information from vast data sources, improving response accuracy and relevance. This is crucial because traditional AI models often rely on outdated knowledge, which can lead to less helpful interactions.

How does RAG enhance the capabilities of LLMs?

RAG enhances LLMs by integrating retrieval mechanisms that allow them to fetch up-to-date information. This means LLMs powered by RAG can provide users with responses that are not only coherent but also relevant to current events and trends. This capability addresses one of the major limitations of traditional LLMs, which often rely on static datasets.

What makes AnythingLLM a great choice for implementing RAG?

AnythingLLM is an excellent choice for implementing RAG due to its user-friendly APIs, customizable templates, and scalability. It simplifies the integration process, making it accessible for developers of all skill levels. Additionally, its robust community support ensures that users have access to the resources they need to successfully implement RAG.

Can RAG be used in specific industries like healthcare or finance?

Absolutely! RAG is highly adaptable and can be tailored to various industries, including healthcare and finance. In healthcare, for instance, RAG can provide real-time updates on medical research and treatments. In finance, it can pull in the latest market data, helping professionals make informed decisions quickly.

What challenges might I face when implementing AnythingLLM for RAG?

Common challenges include connectivity issues, configuration mismatches, and performance optimization. However, the supportive community around AnythingLLM and the wealth of resources available can help you troubleshoot these challenges effectively.

How do I ensure my RAG implementation remains effective over time?

To keep your RAG implementation effective, consider regular updates and establishing feedback loops. This will help your AI adapt to new information and user needs, ensuring it remains relevant and useful in a rapidly changing environment.
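One way to picture “regular updates” is a small job that notices which documents changed since the last run, so only those get re-indexed. The sketch below is an assumption about how you might do that yourself, not a feature of AnythingLLM: `refresh_index` and the in-memory `index` dictionary are hypothetical names, and a real setup would re-embed the changed documents where this one only records a content hash.

```python
import hashlib


def fingerprint(text):
    """Stable content hash used to detect whether a document changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def refresh_index(index, documents):
    """Sync `index` (doc_id -> hash) with `documents` (doc_id -> text).

    Returns (changed_ids, removed_ids), both sorted, so the caller knows
    which documents need re-indexing and which should be dropped.
    """
    changed = []
    for doc_id, text in documents.items():
        digest = fingerprint(text)
        if index.get(doc_id) != digest:
            index[doc_id] = digest  # new or modified document
            changed.append(doc_id)

    removed = [doc_id for doc_id in index if doc_id not in documents]
    for doc_id in removed:
        del index[doc_id]

    return sorted(changed), sorted(removed)


# Hypothetical documents, standing in for your real knowledge base.
index = {}
docs = {"faq": "Refunds take five days.", "hours": "Open weekdays."}
first_run = refresh_index(index, docs)

docs["faq"] = "Refunds take three days."  # content edited
del docs["hours"]                         # document retired
second_run = refresh_index(index, docs)

print(first_run)
print(second_run)
```

The first run flags both documents as new; the second flags only the edited FAQ and reports the retired one as removed, which keeps the retriever from serving stale answers.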